Simulating the multi-armed bandit problem

Machine learning can be split up into many subfields. One of these subfields growing in relevance is reinforcement learning.

One of the core problems in reinforcement learning is the multi-armed bandit problem. This problem has been well studied and is commonly used to explore the tradeoff between exploration and exploitation integral to reinforcement learning.

To illustrate this tradeoff and to visualise different ways of solving the multi-armed bandit problem, I created a simulation using the JavaScript library d3.js.

Click on the below image to view it!

What is the multi-armed bandit problem? Link to heading

Given a number of options to choose between, the multi-armed bandit problem describes how to choose the best option when you don’t know much about any of them.

You are faced repeatedly with n choices, of which you must choose one. After your choice, you are again faced with n choices of which you must choose one, and so on.

After each choice, you receive a numerical reward chosen from a probability distribution that corresponds to your choice. You don’t know what the probability distribution is for that choice before choosing it, but after you have picked it a few times you will start to get an idea of its underlying probability distribution (unless it follows an extreme value distribution, I guess).

The aim is to maximise your total reward over a given number of selections.

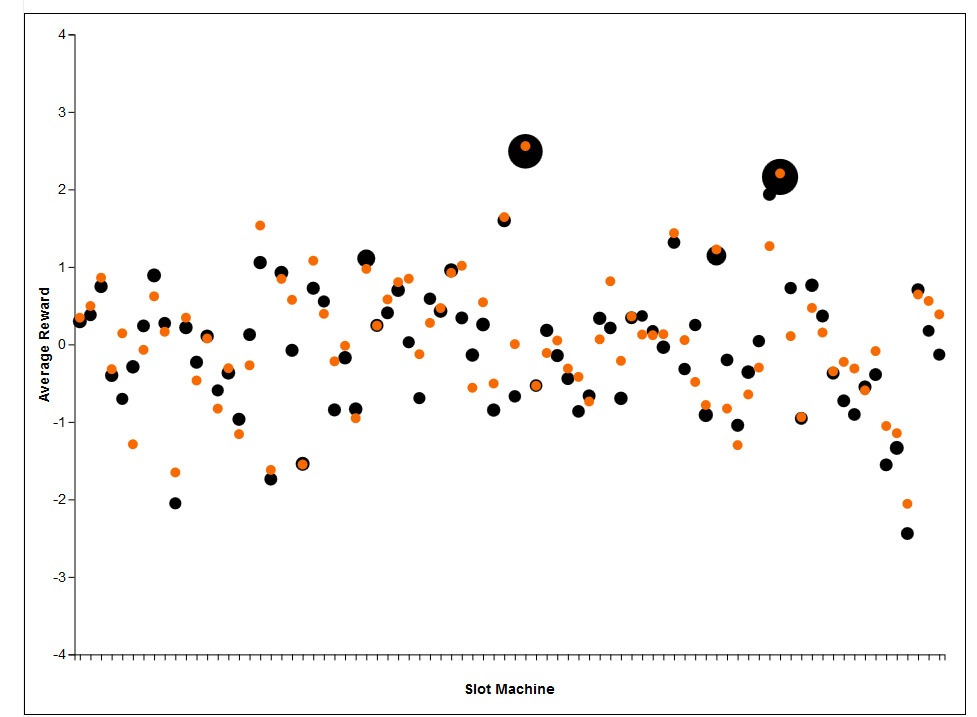

One analogy for this problem is this: you are placed in a room with a number of slot machines, and each slot machine when played will spit out a reward sampled from its probability distribution. Your aim is to maximise your total reward.

If you like, here are three more explanations of the multi-armed bandit problem:

- This article comes in two parts. The first part describes the problem and the second part describes a Bayesian solution.

- The Wikipedia explanation

- A more mathematical introduction

Solving strategies Link to heading

There are many strategies for solving the multi-armed bandit problem.

One class of strategies is known as semi-uniform strategies. These strategies always choose the best slot machine except for a set percentage of the time, where they choose a random slot machine.

Three of these strategies can be easily explored with the aid of the simulation:

Epsilon-greedy strategy: The best slot machine is chosen 1-ε percent of the time, and a random slot machine is chosen ε percent of the time. Implement this by leaving the epsilon slider in one place during a simulation run.

Epsilon-first strategy: Choose randomly for the first k trials, and then after that choose only the best slot machine. To implement this start the simulation with the epsilon slider at 1, then drag it to 0 at some point during the simulation.

Epsilon-decreasing strategy: The chance to choose a slot machine randomly ε decreases over the course of the simulation. Implement this by slowly dragging the epsilon slider towards 0 while the simulation is being run. You can use the arrow keys to decrement it by constant amounts.

There also exist other classes of strategy to solve the multi-armed bandit problem, such as probability matching strategies, pricing strategies and particular strategies that depend on the domain of the application. Also existing are different variants of the multi-armed bandit problem, including non-stationary variants and contextual variants.

I hope you find the simulation useful. Happy banditing!